Spacy and Markovify for Maximum Hilarity

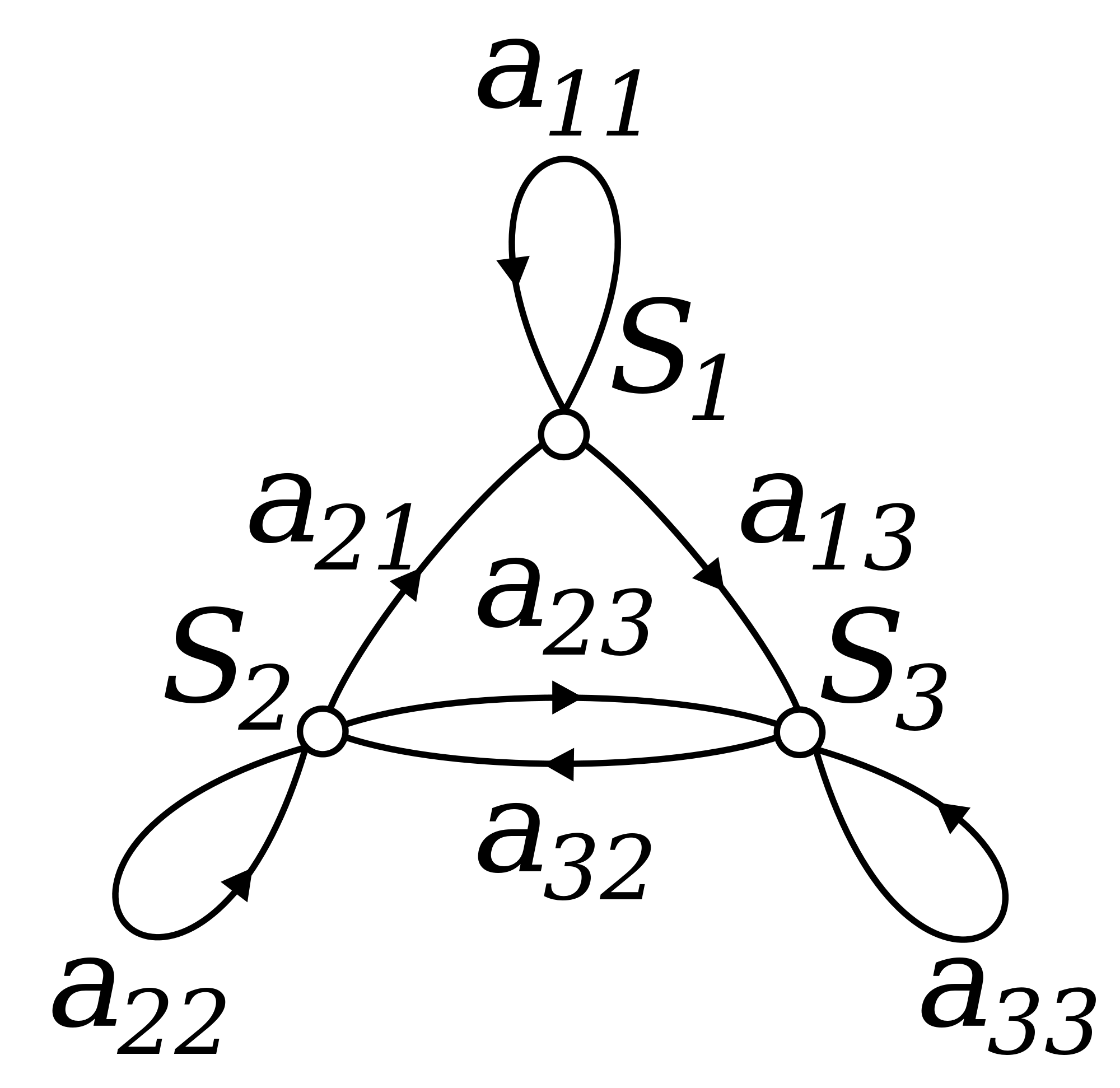

I'm a big fan of jsvine's Markovify library. Markovify is a Python library that lets you generate Markov chain text from sentence-delimited text datasets (so, basically, normal prose). You can feed it anything from classic novels to internet forum comments, and it will spit out semi-related nonsense that almost passes as a sentence.

The library gathers ngrams from the training text and builds a dictionary that records how often each word follows a given ngram. It is at the core of the script used to make posts and comments on the Subreddit Simulator subreddit. I've taken a crack at making a Reddit bot that responds to comments and mentions with sentences generated from a model of all comments in the Shanghai subreddit.
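To make the "dictionary of ngrams" idea concrete, here is a simplified sketch in plain Python. It is not Markovify's actual code: the sentinel tokens and the names build_chain and walk are made up for illustration. Each state is a tuple of state_size words mapped to a Counter of the words that followed it, and generating a sentence means starting from the begin state and repeatedly sampling the next word in proportion to those counts.

```python
import random
from collections import Counter, defaultdict

# Hypothetical sentinel tokens marking the start and end of a sentence.
BEGIN, END = "__BEGIN__", "__END__"


def build_chain(sentences, state_size=2):
    """Map each state (a tuple of state_size words) to a Counter of next words."""
    chain = defaultdict(Counter)
    for words in sentences:
        padded = [BEGIN] * state_size + words + [END]
        # One transition per word, plus the final transition into END.
        for i in range(len(words) + 1):
            state = tuple(padded[i:i + state_size])
            chain[state][padded[i + state_size]] += 1
    return chain


def walk(chain, state_size=2, rng=random):
    """Generate one sentence, sampling each next word in proportion to its count."""
    state = (BEGIN,) * state_size
    out = []
    while True:
        candidates, weights = zip(*chain[state].items())
        word = rng.choices(candidates, weights=weights)[0]
        if word == END:
            return out
        out.append(word)
        # Slide the window forward by one word.
        state = state[1:] + (word,)


sentences = [["the", "cat", "sat"], ["the", "dog", "sat"]]
chain = build_chain(sentences)
print(walk(chain, rng=random.Random(0)))
```

Markovify wraps a chain along these lines with extras, such as rejecting generated sentences that overlap too much with the training text.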

Recently I've taken an interest in NLP and discovered the Spacy Python library. Spacy offers "industrial-strength natural language processing" (improved parsing speed and accuracy).

One of a number of useful tools built on Spacy is Textacy, a library for performing common types of aggregation and post-processing on NLP-parsed text. Textacy offers an interface for saving collections of Spacy docs to disk as binaries. This lets you do the computationally expensive, time-consuming NLP parsing once, up front, and then work with the pre-parsed text during post-processing. These collections (corpora) can also hold metadata about each doc (author, creation time, etc.), which makes them useful for breaking Markov training text into relevant sub-categories.
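Textacy does this for real Spacy docs. As a rough, library-free illustration of the parse-once, load-later pattern (and of splitting training text by metadata), the sketch below uses pickle instead of Textacy. The parse function is a hypothetical stand-in for the expensive Spacy pipeline, and the records and their "author" field are invented for the example.

```python
import os
import pickle
import tempfile
from collections import defaultdict


def parse(text):
    """Hypothetical stand-in for an expensive NLP parse: naive sentence/word split."""
    return [s.split() for s in text.split(".") if s.strip()]


def build_cache(records, path):
    """Parse every record once and pickle the results along with its metadata."""
    parsed = [{"sents": parse(r["text"]), "meta": r["meta"]} for r in records]
    with open(path, "wb") as f:
        pickle.dump(parsed, f)


def load_by(path, key):
    """Reload the cached parses and group their sentences by one metadata field."""
    with open(path, "rb") as f:
        parsed = pickle.load(f)
    groups = defaultdict(list)
    for doc in parsed:
        groups[doc["meta"][key]].extend(doc["sents"])
    return dict(groups)


records = [
    {"text": "Nice weather today. Going out.", "meta": {"author": "alice"}},
    {"text": "Any good dumplings nearby.", "meta": {"author": "bob"}},
]
path = os.path.join(tempfile.mkdtemp(), "parsed.pkl")
build_cache(records, path)      # slow step, done once
by_author = load_by(path, "author")  # fast step, done at run-time
print(by_author)
```

Each group in by_author could then be used as the training text for its own per-author model.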

In the advanced usage section of Markovify's docs, there is an example of how to subclass the markovify.Text class, using NLTK for part-of-speech tagging. This can improve the coherence of the output sentences by keeping homonyms apart when splitting ngrams (for example, "run" as a verb versus "run" as a noun). However, the docs warn that the tagging process can slow down the generation of a Markov model.
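Here is a toy illustration of why tagging helps, assuming a state size of 1 so the effect is easy to see. The tags are written by hand rather than produced by NLTK or Spacy, and transitions is a hypothetical helper. Without tags, both uses of "run" collapse into one state, so their continuations get mixed; with "word::TAG" tokens they become separate states.

```python
from collections import Counter, defaultdict


def transitions(sentences, state_size=1):
    """Count which token follows each state (a tuple of state_size tokens)."""
    chain = defaultdict(Counter)
    for toks in sentences:
        for i in range(len(toks) - state_size):
            chain[tuple(toks[i:i + state_size])][toks[i + state_size]] += 1
    return chain


# Hand-tagged toy sentences (tags written by hand, not by a real tagger).
tagged = [
    [("I", "PRON"), ("run", "VERB"), ("daily", "ADV")],
    [("a", "DET"), ("run", "NOUN"), ("helps", "VERB")],
]

plain = transitions([[w for w, _ in s] for s in tagged])
pos = transitions([["::".join(t) for t in s] for s in tagged])

# Untagged: one shared "run" state, so verb and noun continuations mix.
print(plain[("run",)])
# Tagged: the verb and the noun are separate states.
print(pos[("run::VERB",)], pos[("run::NOUN",)])
```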

So to speed up building a POS-tagged model, we can store the pre-parsed training text as a Textacy corpus and load it at run-time. By overriding a few methods of the markovify.Text class, we can read sentences straight from the corpus (using Spacy's built-in sentence boundaries) and turn each word into a token string that joins its orthographic form and its part of speech with "::" (for example, run::VERB).
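A small design note on the "::" encoding: splitting on every "::" and keeping the first piece truncates any token whose text itself contains "::". Since Spacy's coarse POS tags (NOUN, VERB, PUNCT, ...) never contain "::", splitting only once from the right is a slightly safer way to decode. The helper names below are hypothetical.

```python
def encode(orth, pos):
    """Join a token's text and POS tag, e.g. ('run', 'VERB') -> 'run::VERB'."""
    return "::".join((orth, pos))


def decode(token):
    """Recover the token text; rsplit with maxsplit=1 keeps any '::' in the word."""
    return token.rsplit("::", 1)[0]


def join_words(tokens):
    """Rebuild a sentence from encoded tokens, dropping the tags."""
    return " ".join(decode(t) for t in tokens)


tokens = [encode("std", "PROPN"), encode("::", "PUNCT"), encode("run", "VERB")]
print(tokens)
print(join_words(tokens))
```

With split("::")[0] instead, the middle token "::::PUNCT" would decode to an empty string.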

Here's an example of how you can integrate Markovify with Spacy and Textacy:

```python
import re

import markovify
import textacy
from unidecode import unidecode

# Load the pre-parsed Textacy corpus from disk. (This is the Corpus.load
# signature of the older Textacy release used here; newer releases changed it.)
corpus = textacy.Corpus.load('/path/to/corpus/',
                             name="corpus_name", compression='gzip')

# Reject sentences with quotes, parentheses or brackets, which tend to end up
# unbalanced in randomly generated output. Compiled once instead of per call.
REJECT_PAT = re.compile(r"(^')|('$)|\s'|'\s|[\"(\(\)\[\])]")


class TaggedText(markovify.Text):

    def sentence_split(self, text):
        """
        Returns the sentences (Spacy spans) of every doc in the corpus
        passed in as `text`, using Spacy's own sentence boundaries.
        """
        sentence_list = []
        for doc in text:
            sentence_list += list(doc.sents)
        return sentence_list

    def word_split(self, sentence):
        """
        Splits a sentence into "orth::POS" tokens, e.g. "run::VERB".
        Whitespace tokens (newlines etc.) are skipped.
        """
        return ["::".join((word.orth_, word.pos_))
                for word in sentence if not word.is_space]

    def word_join(self, words):
        """
        Rebuilds a sentence from "orth::POS" tokens, dropping the tags.
        """
        return " ".join(word.split("::")[0] for word in words)

    def test_sentence_input(self, sentence):
        """
        A basic sentence filter. This one rejects sentences that contain
        the type of punctuation that would look strange on its own
        in a randomly-generated sentence.
        """
        text = sentence.text
        if not text.strip():
            return False
        # Decode unicode, mainly to normalize fancy quotation marks
        decoded = unidecode(text)
        # Sentence shouldn't contain problematic characters
        return REJECT_PAT.search(decoded) is None

    def generate_corpus(self, text):
        """
        Given a corpus, returns a list of lists; that is, a list of
        "sentences," each of which is a list of words. Before splitting into
        words, the sentences are filtered through `self.test_sentence_input`
        """
        sentences = self.sentence_split(text)
        passing = filter(self.test_sentence_input, sentences)
        # Split only the sentences that passed the filter
        return [self.word_split(sentence) for sentence in passing]


# Generate the model from the corpus of pre-parsed docs
model = TaggedText(corpus)

# A sentence based on the model (make_sentence returns None if it fails)
print(model.make_sentence())
```
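To check what the sentence filter actually throws away, you can run its regular expression over a few plain strings. This sketch reuses the reject pattern from test_sentence_input but skips the unidecode step and works on str rather than Spacy spans; the sample sentences are made up.

```python
import re

# Same reject pattern as in test_sentence_input.
REJECT_PAT = re.compile(r"(^')|('$)|\s'|'\s|[\"(\(\)\[\])]")


def passes(sentence):
    """True if the sentence contains none of the punctuation the filter rejects."""
    return REJECT_PAT.search(sentence) is None


samples = [
    "It's a nice day.",        # apostrophe inside a word is allowed
    "'Tis the season.",        # leading apostrophe is rejected
    'He said "hello".',        # double quotes are rejected
    "See the note (below).",   # parentheses are rejected
]
for s in samples:
    print(passes(s), s)
```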

This post is a work in progress. I'll keep adding to it when I get a chance.